Unlike in previous conflicts, one of the new threats that may affect the course of the current war in Gaza is the use of artificial intelligence (AI) for purposes such as disinformation, for example in deep-fake videos produced by Hamas. It seems that while the fighting continues on the ground, cyberspace also serves as a central battlefield.

The use of disinformation may have a dramatic effect on both decision-makers and public opinion.

The deep-fake phenomenon first gained popularity as entertainment and for private use. Deep-fake apps allowed users to superimpose faces onto other characters or create videos for fun. However, the potential of this advanced technology has not gone unnoticed by terrorist organizations.

Extremist groups have recognized the potential of planting synthetic content to manipulate the information environment for their nefarious purposes. For example, the Kashmir-based militant group known as the “Resistance Front” (Tehreeki-Milat-i-Islami) used fake videos and photos to manipulate Indian youth.

Disinformation and deception have become powerful weapons with far-reaching consequences. In the digital age, the proliferation of social media platforms has made it significantly easier for malicious actors to manipulate public opinion, sow discord and undermine trust in democratic institutions and processes. By distributing fabricated visual content, these groups aim to incite violence, exacerbate existing tensions, and deliver extreme messages to their intended audience.

As a tool of radicalization, offensive use of deep-fakes is growing rapidly and demands closer attention.

A striking example is a deep-fake video of Ukrainian President Volodymyr Zelensky in which he appears to implore Ukrainian fighters to lay down their weapons.

Deep-fakes of political leaders have also been used to influence public opinion in the US and the UK.

Hamas and its supporters have already used deep-fakes in the current war, particularly concerning the hostages held in Gaza. In these cases, deep-fake content served as a form of social engineering, leading people to believe that events had taken place that never actually happened.

These actors use viral content to shift public opinion, and such content spreads without delay across platforms such as Telegram. In fact, most of these deep-fake videos originate in content groups created by Telegram users.

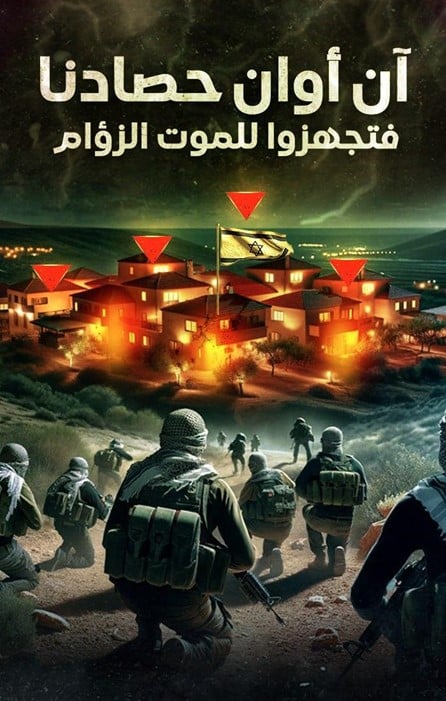

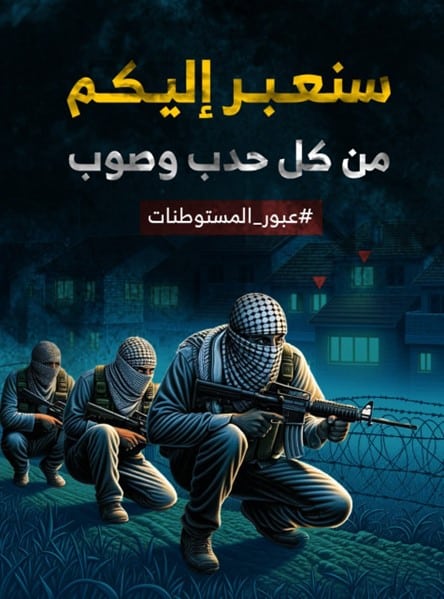

In addition to disinformation, Hamas uses advanced AI-generated graphics to spread propaganda that incites “lone wolf” attacks and to garner support among the Palestinian public in particular and in countries around the world in general.

In many posts on Telegram channels, Hamas and its supporters distribute AI-generated graphics to spread their extremist propaganda and calls to harm Israelis.

One example is AI-generated artwork depicting masked terrorists poised to attack an Israeli town, much as Hamas did in the October 7 attacks.

Other designs explicitly call for vehicle-ramming attacks against Israelis, or incite “lone wolf” attacks in response to IDF actions or to open combat arenas beyond Gaza.

Hamas is not the first organization to use artificial intelligence for propaganda and to incite supporters to carry out attacks. Other jihadist organizations, such as ISIS and Al Qaeda, preceded it, and white supremacist groups have also made extensive use of AI.

Terrorists love technology

Jihadist actors have been early adopters of emerging technologies from the very beginning, in keeping with their belief that Western advances should be used to defeat the West. Former Al Qaeda leader Osama bin Laden, for instance, used email to convey his plans for the September 11 attacks. Anwar al-Awlaki, one of Al Qaeda’s most inspiring figures, used YouTube for outreach and messaging, earning the nickname “Bin Laden of the Internet” while recruiting an entire generation of followers in the West. (Al-Awlaki, a Yemeni-American, addressed his followers in fluent English and distributed hundreds of video lectures on YouTube.)

Over more than 20 years of Internet and social media use, terrorist organizations have continually sought new ways to maximize their online activity: to plan attacks, outsmart Western countermeasures, and recruit supporters. AI has now entered this arena as a potential game changer in their jihad against the world.

The dark side of AI

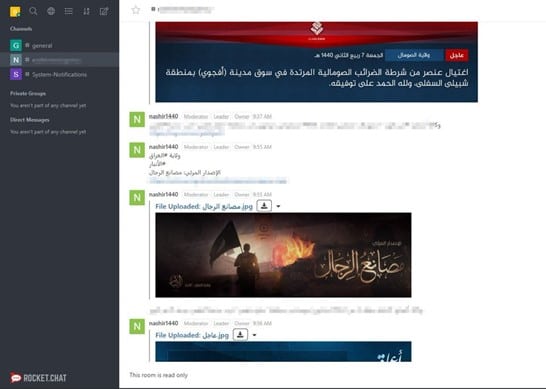

On December 6, 2022, a dedicated user of the ISIS-operated Rocket.Chat server, with a large following, posted that he was using the free ChatGPT chatbot for advice on supporting the Islamic Caliphate.

Two weeks later, other ISIS supporters expressed interest in using another AI platform, ‘Perplexity Ask’, to create content promoting jihad. Another user shared his findings in a large discussion, where participants agreed that AI could be used to help the extremist jihadist organization.

Communication platforms, such as chat apps, have the potential to become powerful tools for terrorist actors to incite and recruit people. Using artificial intelligence algorithms, these platforms can tailor messages to the interests of potential recruits.

The use of chatbots can normalize extreme ideologies and foster a sense of belonging to extremist groups.

Terrorists can exploit the anonymity of these platforms to hide their identity while interacting with potential recruits. In addition, the multilingual capability of AI chatbots allows terrorists to reach a large audience around the world, overcome language barriers and expand their recruitment pool.

Jonathan Hall KC, the British government’s independent reviewer of terrorism legislation, told the British newspaper ‘The Telegraph’ that he had conducted an experiment on ‘Character.ai’, a website where users can hold AI-generated conversations with chatbots created by other users.

Hall spoke to several bots purportedly designed to mimic the responses of militant and other extremist groups. One even claimed to be “a senior leader of the Islamic State.” According to Hall, the bot tried to recruit him and expressed “complete dedication and devotion” to the extremist group. Hall noted that because the messages were not created by a person, no crime had been committed under British law. As part of the experiment, Hall also created his own “Osama Bin Laden” chatbot with “unbridled enthusiasm” for terrorism, which he quickly deleted.

Hall’s experiment demonstrates how easily AI tools can be used and how extremist elements might exploit them, and it comes amid growing concern about the misuse of advanced AI by extremists.

A report published by the British government in October 2023 warned that by 2025, generative artificial intelligence could be used “to gather knowledge about physical attacks by violent non-state actors, including for chemical, biological and radiological weapons.”

The adoption of AI by terrorist organizations is not only a critical threat to national security but also a force multiplier for their destructive agenda.

The increase in the use of AI by terrorist organizations can be attributed mainly to the accessibility and affordability of this innovative technology. In the past, access to AI was limited to state actors and multinational corporations. Today, however, the availability of this technology to the general public has made it easier for terrorists to acquire and use AI capabilities, and even to plan and execute attacks with greater precision.

The Jihad Machine

The use of AI in the development of autonomous weapons poses significant dangers to both military and civilian populations. These AI-powered machines can potentially make decisions on their own, select targets and use lethal force without human intervention. This raises serious concern that terrorist organizations may incorporate such weapons into their warfare.

This possibility raises a terrifying scenario in which terrorists gain access to advanced technologies, perhaps eventually including fully autonomous killer robots deployed in the field, free of logistical and manpower constraints.

Use of drones by terrorist organizations

There have already been reports of terrorists experimenting with armed drones and other remotely controlled technologies. ISIS, for example, is known to have used drones equipped with explosives in its attacks.

In 2021, two drone-assisted explosions occurred at an Indian Air Force base in Jammu, and the evidence pointed to the involvement of the Pakistani terrorist organization Lashkar-e-Taiba. The first explosion caused severe damage to a one-story building, while the second occurred in an open area. The investigation of the attack yielded substantial information about its nature. It found that the terrorists flew the drones at low altitude at night, which prevented their detection and allowed them to penetrate the high-security area. The use of drones allowed the explosive charges to be delivered to their targets with great accuracy.

Iranian-backed Hezbollah has the most extensive history of drone use of any violent non-state actor, and its drone program continues to grow and develop. The organization now has a fleet of drones that includes Iranian-made models such as the ‘Ababil’ and the ‘Mirsad-1’ (an updated version of the early Iranian ‘Mohajer’ drone used for reconnaissance during the Iran-Iraq War of the 1980s).

Hamas, as part of the “Axis of Resistance,” has long-standing strategic cooperation with Hezbollah and Iran that has expanded into the field of technology. In 2021, Hamas unveiled a new threat when it released a video of the “Shahab” suicide drone, which carries a built-in warhead.

Following similar patterns, ISIS began using drones in 2013. Unlike the programs of other terrorist organizations, ISIS’s drone program did not draw on advanced military capabilities or state agencies. Instead, the Salafist organization used readily available commercial technology in a ‘do it yourself’ (DIY) manner. Nevertheless, ISIS developed a robust drone infrastructure and used it intensively. In January 2017, ISIS reportedly published a statement in its weekly magazine, “Al-Nabaa,” announcing the establishment of a new unit called “The Unmanned Aircraft of the Mujahideen.” This specialized unit was established with the express purpose of advancing ISIS’s drone capabilities and integrating them into its operational strategy.

In conclusion, the chilling potential of AI in the hands of terrorists makes it even more important for the international community to work together and develop robust strategies to prevent the misuse of AI technologies.

The emergence of diverse, accessible AI technologies, such as deep-fakes, chatbots and drones, marks a step up in terrorist organizations’ propaganda and operational capabilities, enabling them to refine their messaging, recruit supporters and carry out more sophisticated attacks.

We are at the beginning of a new era in which the use of artificial intelligence is expanding into many areas worldwide. The more accessible this technology becomes, the easier it is for terrorist organizations to use. To ensure that AI develops within a safe digital space, as a useful tool rather than a threat in the physical world, intelligence and security agencies must outpace the capabilities of terrorist organizations and upgrade their counterterrorism capabilities for this new era.